The rapid development of AI (Artificial Intelligence) and specifically Generative AI (ChatGPT being the most well-known example) has introduced a lot of new and exciting tools, but also further cluttered up an already confusing ‘alphabet soup’ of names and abbreviations related to AI, ML and Big Data sets.

At resolvent, we work a lot in the intersection between AI technologies and more “classical” Multiphysical Modelling. In this work, we frequently meet ambiguity about how the terms and technologies relate to each other. A few examples:

- Is all AI also Machine Learning?

- Is ChatGPT a “broader form” of AI because it appears so capable?

- What is the difference between Reduced Order Modelling and Surrogate Modelling?

This blog post seeks to pragmatically lay out our take on a terminology that makes sense within our day-to-day work in engineering. This entails placing the concepts and terms in Figure 1 (right) in meaningful relation to each other.

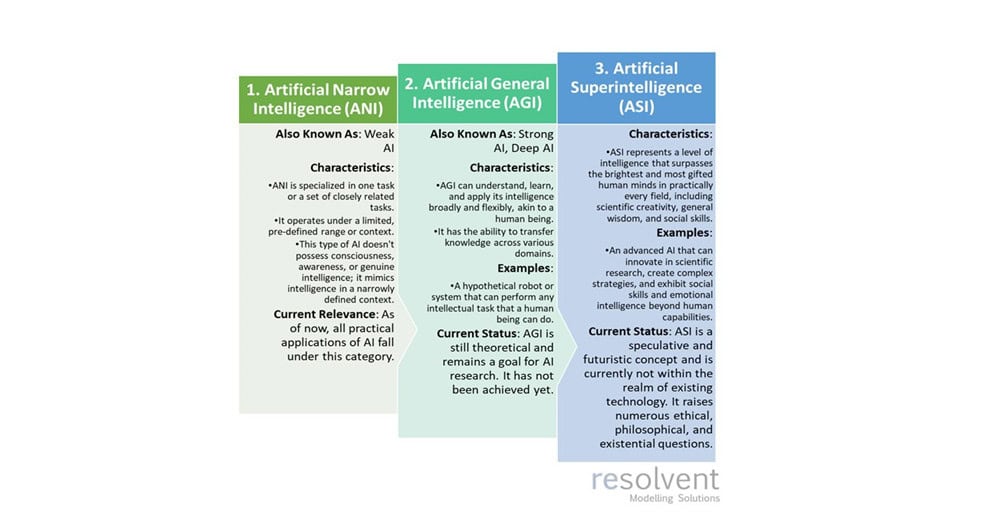

Types of Artificial Intelligence

At a high level, AI is sometimes categorized into 3 levels as seen in Figure 2.

At first glance, it may seem a bit hypothetical to consider even Level 2, Artificial General Intelligence (AGI), where the intelligence is “human-like”, but OpenAI (the company behind ChatGPT) has made it their stated mission to develop and market AGI in the future. If nothing else, this shows two things:

- However impressive the interactions with ChatGPT (and similar agents) can be, they are not human-like intelligence but rather refined “auto-complete machines”.

- There is currently a drive towards AGI (as evidenced by the influx of funding that OpenAI and its peers receive), so we might see something beyond the current Artificial Narrow Intelligence products in the future.

In the remainder of this post, “AI” will refer to Artificial Narrow Intelligence.

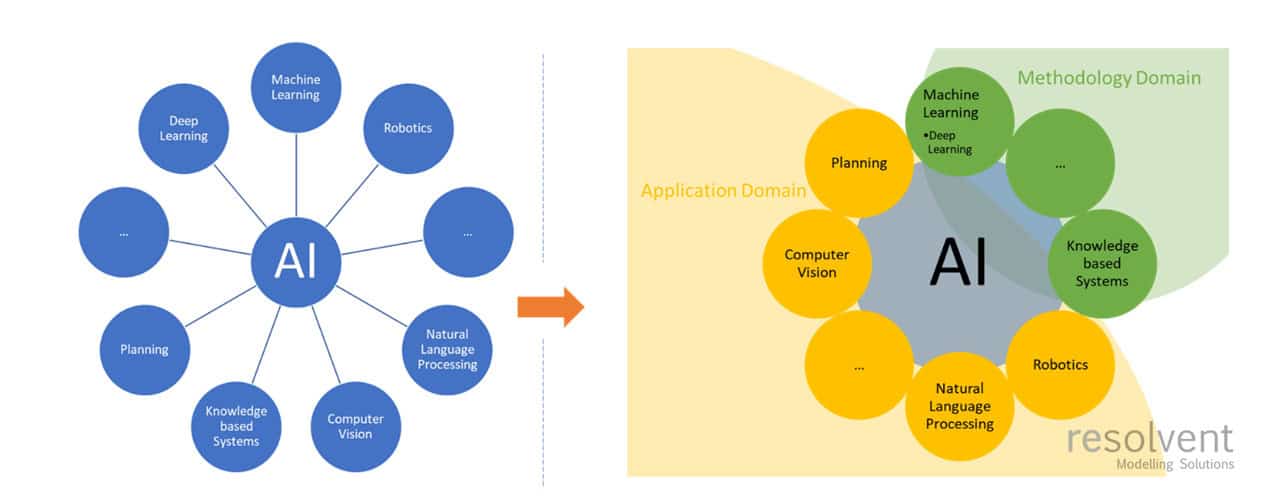

Fields within AI

The fields that “make up” AI are often represented as in Figure 3 (left), where e.g. Robotics, Machine Learning (ML) and Deep Learning are mentioned side by side. We would rather distinguish between Methodologies and Applications, and consider Deep Learning a subfield of ML, as seen in Figure 3 (right). This distinction better communicates that Robotics might utilize methods from both ML and Knowledge Based Systems, etc.

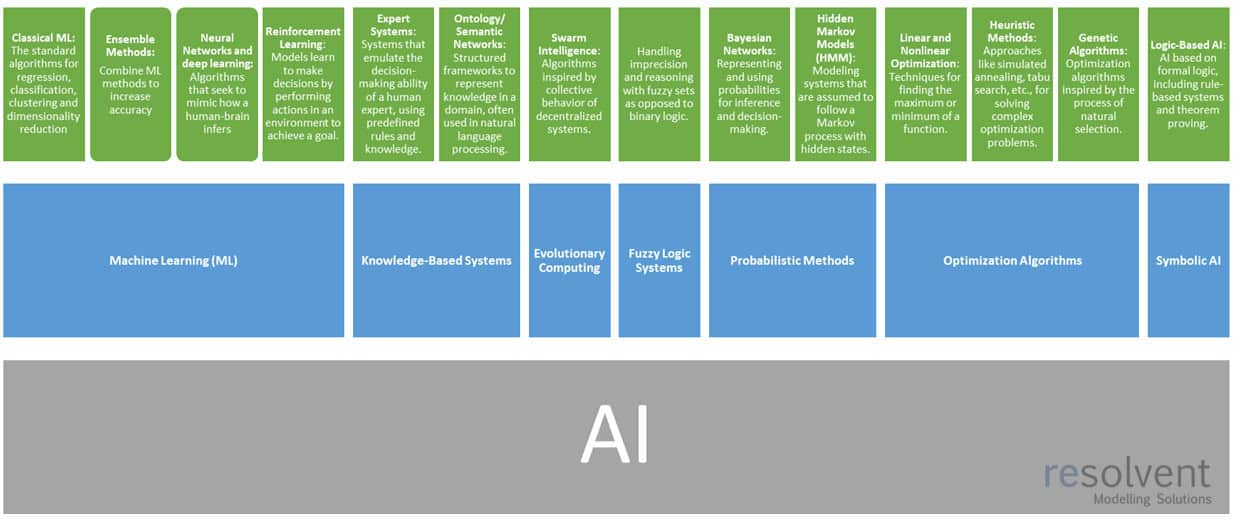

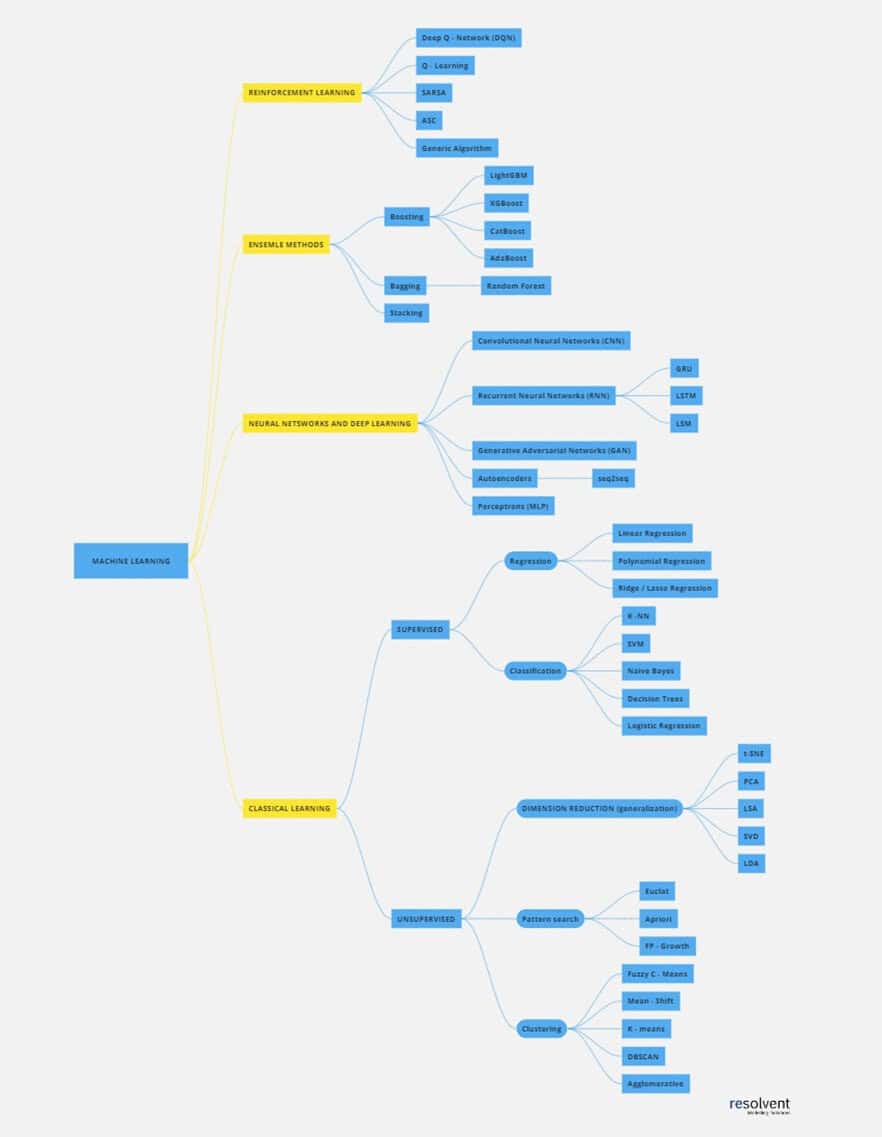

With a focus on the Methodology Domain, we can list (some of) the subfields as seen in Figure 4. Ultimately the exact distinction between methodologies will be somewhat subjective and incomplete, but this is a decent starting point.

This highlights that AI is not only ML but also a range of other fields, of which we also frequently use various Optimization Algorithms and Probabilistic Methods in our line of engineering. However, for the remainder of this post, we will focus on Machine Learning, as most of the concepts and terms we seek to anchor fall within this methodology.

Fields within Machine Learning

As indicated in Figure 4, ML can reasonably be divided into 4 categories. [2] fleshes this out quite nicely, as seen in Figure 5.

The distinction between Classical Learning and Neural Nets and Deep Learning is appealing. Both are clearly ML categories, but several of the Classical Learning algorithms are ones we have used daily on our laptops in e.g. Excel or NumPy, without realizing we were in fact practitioners of AI 😊 In contrast, Neural Nets and Deep Learning algorithms typically require knowledge about NN structures and GPU-based hardware that not all engineers have readily available.
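As a small illustration of how ordinary a Classical Learning algorithm can look, the sketch below fits a straight line by least squares in NumPy, which is essentially what a trendline in a spreadsheet does. The load and deflection values are made up for the example and do not come from any of our projects.

```python
import numpy as np

# Hypothetical measurements (invented for this example):
# applied load in N and measured deflection in mm.
load = np.array([0.0, 10.0, 20.0, 30.0, 40.0])
deflection = np.array([0.1, 2.1, 3.9, 6.2, 7.9])

# Ordinary least-squares fit of a straight line:
# deflection ~ slope * load + intercept
slope, intercept = np.polyfit(load, deflection, deg=1)


def predict(new_load):
    """Predict the deflection for a load that was not measured."""
    return slope * new_load + intercept


print(f"slope = {slope:.3f} mm/N, intercept = {intercept:.3f} mm")
print(f"predicted deflection at 25 N: {predict(25.0):.3f} mm")
```

Linear regression of this kind is supervised learning in its simplest form: the “training” is the fit, and the “inference” is evaluating the fitted line at a new input.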

For the purpose of this blog, we will not go into Reinforcement Learning or Ensemble Methods, and will instead focus on reconciling AI with our work in Multiphysical simulation in the next section.

Bringing it into the realm of Multiphysics simulation

At resolvent, we work extensively with numerical simulation of physical systems using (mainly) the Finite Element method, frequently in Comsol Multiphysics. This allows us to predict complex system behaviour that cannot easily be tested. An example is seen in Figure 6, where the fuel flow in an Electrolyzer system is modelled and the pressure distribution, etc. is found.

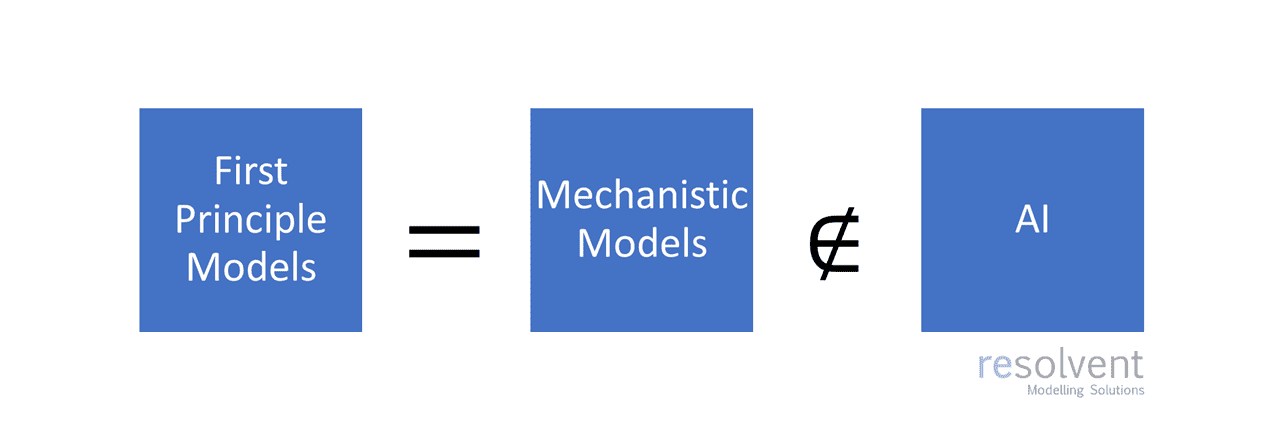

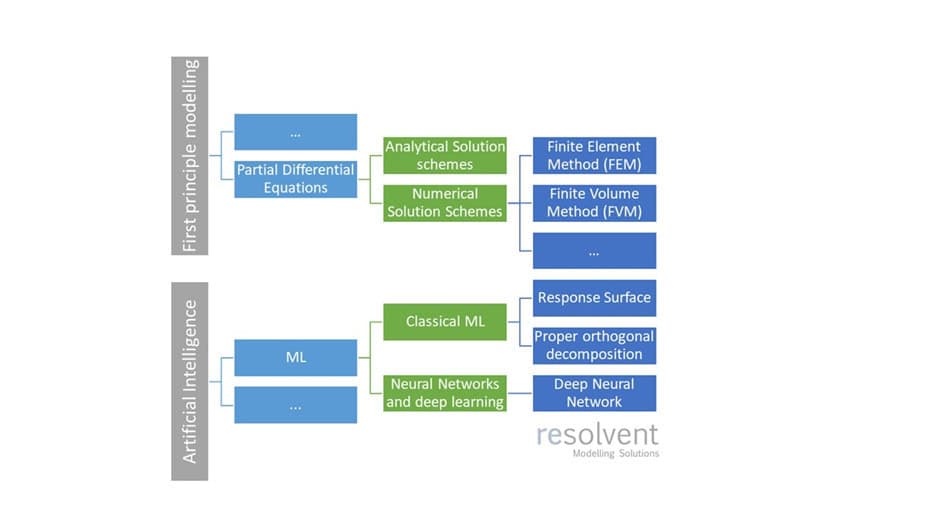

Does this work fall under the AI categories we have previously established? Short answer: No. Longer answer: Finite Element Simulation (and all its relatives, e.g. Finite Volume) is historically and method-wise not AI. Instead, these methods are frequently referred to as First Principle Models or Mechanistic Models, and they are based on knowledge of the physical laws that govern our world.
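To make the contrast with data-driven methods concrete, here is a minimal sketch of a First Principle Model: steady 1D heat conduction, -k T'' = q, with fixed end temperatures. Note that it uses a simple finite difference discretization as a stand-in, not the Finite Element method, and that the function name and all parameter values are assumptions chosen for illustration. The point is that no training data is involved; the result follows from the governing equation alone.

```python
import numpy as np


def solve_heat_1d(n_nodes=51, length=1.0, conductivity=1.0, source=1.0,
                  t_left=0.0, t_right=0.0):
    """Solve -k * T'' = q on [0, length] with prescribed end temperatures.

    Finite difference sketch with a uniform grid and a constant source term.
    """
    x = np.linspace(0.0, length, n_nodes)
    h = x[1] - x[0]
    n_inner = n_nodes - 2

    # Tridiagonal matrix for the second derivative at the interior nodes.
    main = 2.0 * np.ones(n_inner)
    off = -1.0 * np.ones(n_inner - 1)
    A = (np.diag(main) + np.diag(off, 1) + np.diag(off, -1)) * conductivity / h**2

    # Right-hand side: heat source plus boundary contributions.
    b = np.full(n_inner, source, dtype=float)
    b[0] += conductivity * t_left / h**2
    b[-1] += conductivity * t_right / h**2

    T = np.empty(n_nodes)
    T[0], T[-1] = t_left, t_right
    T[1:-1] = np.linalg.solve(A, b)
    return x, T


x, T = solve_heat_1d()
# Analytical peak temperature for this setup is q * L**2 / (8 * k) = 0.125.
print(f"peak temperature: {T.max():.4f}")
```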

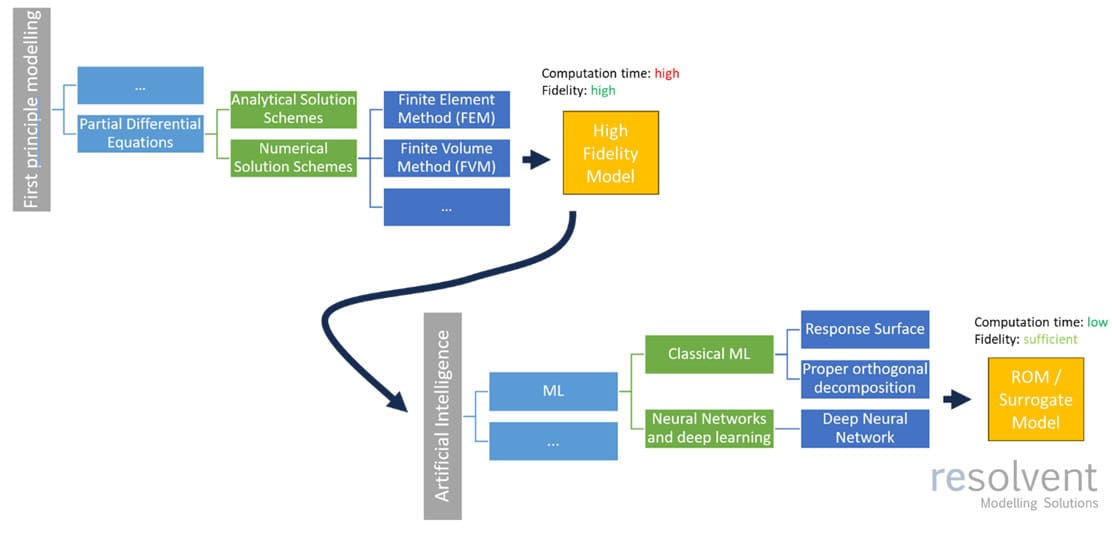

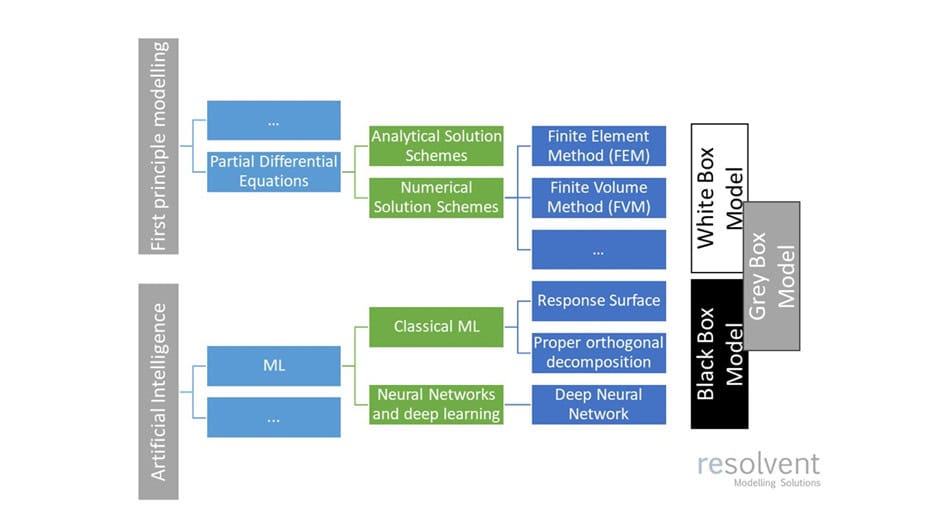

Having established that AI and First Principle Models are complementary categories, we can start placing the concepts from the initial paragraph into proper context. To do so, we start with the higher-level overview in Figure 8.

With this in place, we can tackle the concepts of Reduced Order and Surrogate Modelling. Their purpose is to be computationally fast and lightweight representations of a full-fledged First Principle Model. Since a First Principle Model can take hours or days to solve, a representation that responds in seconds or minutes with reasonable fidelity is desirable in, e.g.:

- Control applications

- System-level simulations

- Early concept screenings

- Predictive maintenance

- Digital Twins

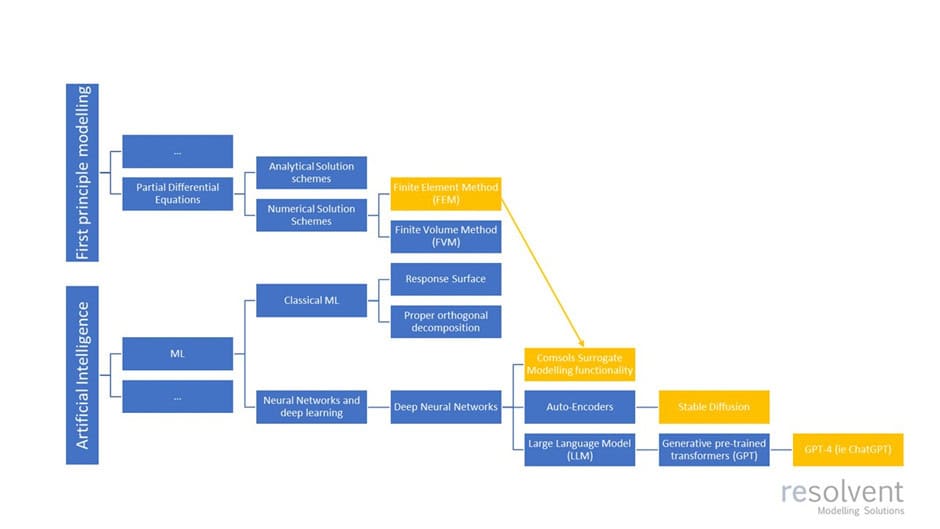

In practice, the lower-fidelity Reduced Order or Surrogate Models are built “on” the outputs from a First Principle Model in various ways, as illustrated in Figure 9, all involving some discipline from ML.

Note that we consider Reduced Order and Surrogate Models synonyms for our applications.
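The basic workflow can be sketched in a few lines: run the expensive model at a handful of design points, fit a cheap regression to the results, and query the fit instead of the full model. In the sketch below, `expensive_model` is a hypothetical stand-in for a long-running simulation, and the quadratic pressure-drop relation and its coefficients are invented for the example. Because the stand-in is deliberately a simple quadratic, the polynomial fit reproduces it almost exactly; a real simulation would not be matched this well.

```python
import numpy as np


def expensive_model(flow_rate):
    """Hypothetical stand-in for a long-running First Principle simulation.

    Returns a pressure drop for a given flow rate (made-up relation).
    """
    return 0.5 * flow_rate**2 + 2.0 * flow_rate


# 1) Run the "expensive" model at a handful of design points.
train_flow = np.linspace(0.0, 10.0, 6)
train_dp = np.array([expensive_model(q) for q in train_flow])

# 2) Fit a cheap surrogate to those outputs (quadratic polynomial regression).
surrogate = np.poly1d(np.polyfit(train_flow, train_dp, deg=2))

# 3) Query the surrogate at a point that was not simulated and compare.
test_flow = 7.3
error = abs(surrogate(test_flow) - expensive_model(test_flow))
print(f"surrogate: {surrogate(test_flow):.3f}, "
      f"full model: {expensive_model(test_flow):.3f}, error: {error:.2e}")
```

In a real project the polynomial would typically be replaced by whatever regression method suits the data, and the surrogate would be validated on simulation runs that were held back from the fit.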

In the context of First Principle Models and AI, the terms White-, Black- and Grey-box models are also frequent. These concepts do not address the computational complexity of the model, but rather its “transparency”. A White-box model is transparent in that its inner workings can be reasoned about; a First Principle Model based on a physical law is an example. In contrast, a Black-box model is opaque in that its inner workings are not necessarily understood; a deep Neural Network whose weights are the result of fitting to training data is an example.

This is shown in Figure 11, where we have augmented Figure 8 and included the frequently used “hybrid” Grey Box Model that employs both First Principle Modelling and ML methods.
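One common way to build such a hybrid, sketched below under stated assumptions, is to keep a physical law as the backbone and let a data-driven fit correct what the law does not capture. Here the physics part is Ohm's law with an assumed resistance of 2 ohm, the “measurements” are synthetic, and the correction is a simple polynomial regression on the residual. All names and numbers are hypothetical.

```python
import numpy as np


def physics_model(current):
    """White-box part: Ohm's law, V = R * I, with an assumed R of 2 ohm."""
    return 2.0 * current


# Synthetic "measurements" containing an effect the physics model omits
# (here an invented quadratic term).
current = np.linspace(0.0, 5.0, 11)
measured_voltage = 2.0 * current + 0.1 * current**2

# Black-box part: fit a regression to what the physics model cannot explain.
residual = measured_voltage - physics_model(current)
correction = np.poly1d(np.polyfit(current, residual, deg=2))


def grey_box_model(i):
    """Grey-box prediction: physical law plus data-driven correction."""
    return physics_model(i) + correction(i)


print(f"physics only at 3 A: {physics_model(3.0):.2f} V")
print(f"grey box at 3 A:     {grey_box_model(3.0):.2f} V")
```

The physics part stays interpretable, while the fitted correction absorbs the unmodelled behaviour, which is why the combination sits between the White-box and Black-box extremes.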

Zooming out a bit

Where do 3 of the products that we use day-to-day fit into all of this? Namely:

- Comsol Multiphysics, which is a Finite Element Simulation tool

- And its Surrogate Modelling framework

- The Stable Diffusion image generator

- ChatGPT, which is really a chat-based interface on top of the GPT-4 model

A rough overview is given in Figure 12, knowing full well that Stable Diffusion and GPT-4 in particular actually use a complex hybrid of methods from many domains. It can nevertheless be argued that their core functionality is as indicated.

Final thoughts

The previous sections have sought to place some of the most frequently encountered AI-related concepts in relation to our day-to-day work at resolvent. With that in mind, it is prudent to revisit the ‘ambiguities’ listed at the beginning of this post:

- Is all AI also Machine Learning?

- No, AI also entails disciplines like Fuzzy Logic and expert-based heuristic systems.

- Is ChatGPT a “broader form” of AI because it appears so capable?

- No, it is still considered Weak or Narrow AI. The ‘illusion’ of intelligence comes from the LLM’s ability to “autocomplete” the user’s prompts in ways that appear (and frequently are) meaningful.

- What is the difference between Reduced Order Modelling and Surrogate Modelling?

- None – they are synonyms within our use-cases.

Sources

The generative AI revolution has begun—how did we get here? | Ars Technica

Machine Learning for Everyone — In simple words. With real-world examples. Yes, again — vas3k

Concepts of Artificial Intelligence for Computer-Assisted Drug Discovery | ResearchGate